What Is Duplicate Content?

Duplicate content refers to identical or substantially similar content that appears in more than one place, either within a single website or across multiple websites. Simply put, it is content that appears on more than one page or URL.

This can include verbatim repetition of text, images, videos, or any other type of content found on different pages within your website or among other websites and yours.

Duplicate content can be unintentional, such as when multiple URLs on a site lead to the same content, or it can be deliberate, like when someone copies and pastes content from one source to another. Whichever it is, when you find your website has this issue, it is important to correct it immediately, as it can have quite a negative impact on your website’s SEO.

How Does Duplicate Content Affect SEO?

Search engines, such as Google, aim to provide users with diverse and relevant search results. To achieve this, they try to identify and filter out duplicate content, as displaying multiple identical or highly similar results may not be helpful for users.

In the context of SEO (Search Engine Optimization), having duplicate content on your website can lead to issues such as:

-

Search engine confusion: Duplicate content confuses search engines, making it challenging for them to prioritize a single version. This confusion can lead to lower rankings for all instances of the content.

-

Ranking dilution: Instead of consolidating ranking signals for a piece of content, duplicates can spread signals across multiple versions, resulting in lower individual rankings and reduced overall impact.

-

Crawling and indexing issues: Duplicate content wastes search engine resources, causing inefficient crawling and indexing. This can slow down the discovery and indexing of new, relevant content.

-

Penalties for manipulative behavior: Intentionally creating duplicate content to manipulate rankings can lead to penalties from search engines, affecting a website's visibility and credibility.

-

Poor user experience: Duplicate content creates a suboptimal user experience, potentially leading to frustration and lower user satisfaction, impacting metrics like bounce rates.

-

Backlink fragmentation: Duplicate content across different URLs can fragment backlinks, reducing the overall impact of backlinks on a specific piece of content and affecting its authority.

How Did It Happen?

The most common causes of duplicate content and how they occur include the following:

URL Problems

A common cause of duplicate content on websites is the website creator not fully understanding the concept of URLs. The website’s URL is the unique address of a webpage pointing it to the location of the webpage on the web. If there is a variation of a specific URL, this can lead to duplicate content issues.

URL Parameters

URL parameters, also known as query strings, can result in duplicate content by producing several copies of the same page that search engines treat as separate web pages. This happens when a website utilizes URL parameters to generate URL variants that do not reflect distinct content.

When a website employs URL parameters like product filters to generate variants of a page's URL that do not reflect distinct content, search engines may treat these URLs as separate web pages, causing confusion and diluting the site's page authority.

WWW Pages vs. Non-WWW Pages

Websites that have a www page and a non-www page may experience a duplicate content issue. The reason for this is the same content will exist on both pages, and search engines may not view these websites as the same, so it will identify it as a duplicate content issue.

Content Scapers

Content scrapers, also known as web scrapers or content scraping bots, are automated tools or scripts designed to extract content from websites. These tools crawl through web pages, gather information, and often copy the content for various purposes.

Search engines will notice the duplicate content; however, they will not be able to tell which one is the original. In this situation, the search engine may not rank either website or choose the stolen one and ignore the pages for which you worked hard to produce content.

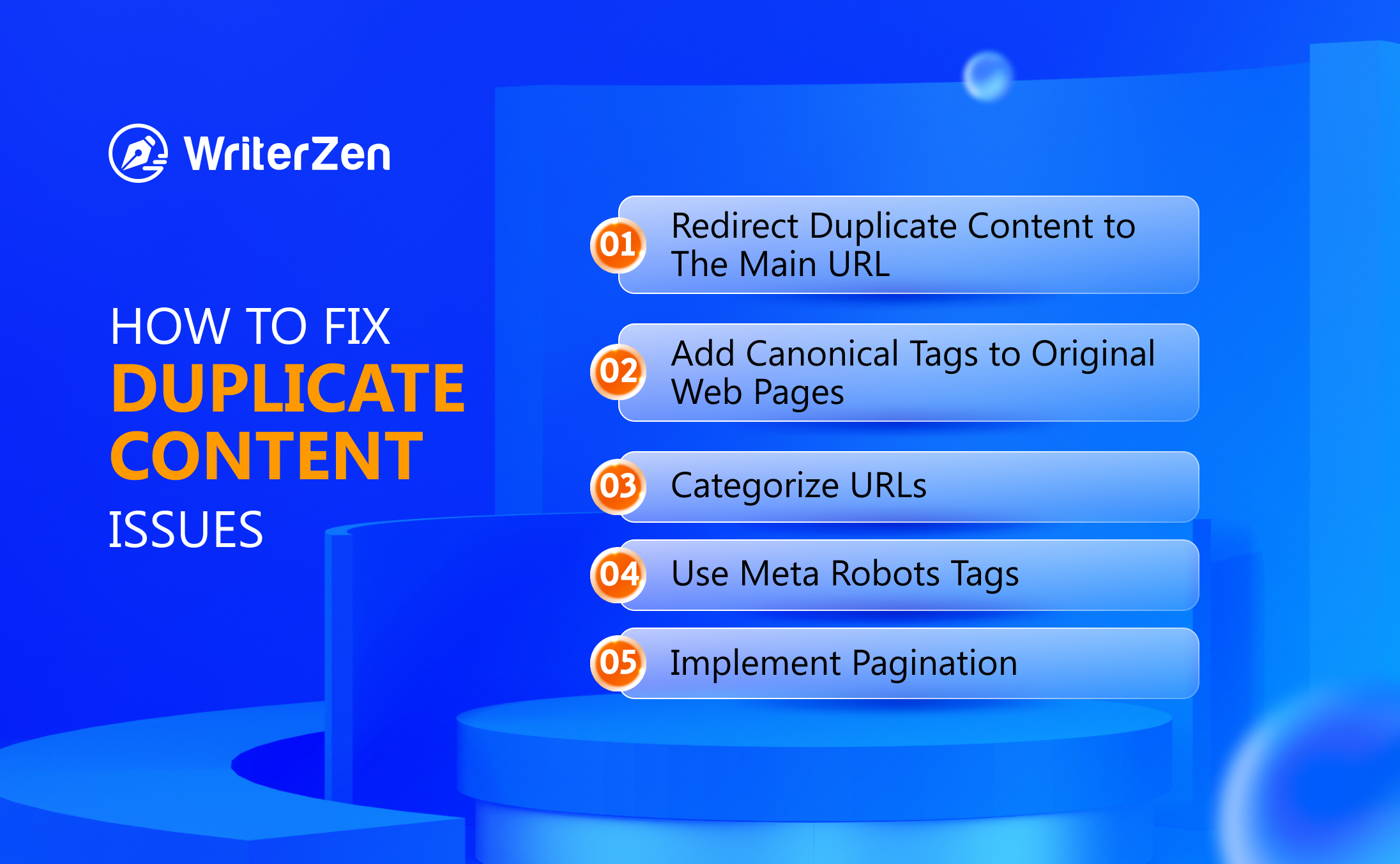

How to Fix It?

As mentioned above, there are quite a few reasons that can lead to duplicate content issues. Once you know what may be the cause of the duplicate content, it is quite a simple issue to fix.

But, if you are unsure about the cause, here are some methods that you should try to fix this issue:

Redirect Duplicate Content to The Main URL

By redirecting all duplicates of a page to the URL that search engines consider to be the primary or original version. You can do this with a 301 redirect, which informs search engines that the content has been permanently relocated to a new location.

You can boost your search engine ranking by merging the authority of all duplicated URLs into one primary URL.

Add Canonical Tags to Original Web Pages

A canonical tag is an HTML tag that tells search engines about the primary or preferred version of a webpage.

You can consolidate the authority of all duplicated URLs into one primary URL using canonical tags without diverting people to a new URL. This is very important if you want to keep several versions of a webpage that users can access.

Categorize URLs

By categorizing your URLs properly, you can prevent URL parameter issues, which might lead to duplicate content.

This includes making sure that each URL reflects a distinct piece of information and that any additional parameters provided in the URL don't modify the page's content. You can also utilize URL parameters to monitor user activity on your site, but make sure these URLs are not indexed by search engines.

Use Meta Robots Tags

These HTML tags tell search engines how they should view a site, including whether or not to index it. You can stop search engines from indexing multiple versions of a page by adding meta robots tags, which can help maintain the site's authority and increase your rankings in search engines.

Implement Pagination

If you have a lot of content that has to be displayed over multiple pages on your website, you can use pagination to break it into smaller, more manageable parts.

Pagination is the process of dividing content into logical sections and displaying them on separate pages with links connecting them. This can help prevent duplicate content problems that can occur if all the content is shown on one long page.

You can use this in combination with canonical tags to specify the recommended URL for each page of paginated material, which can help consolidate the authority of all the pages into one URL.

Final Thoughts

In conclusion, understanding the nuances of duplicate content is essential for anyone involved in online content creation and digital marketing. Duplicate content can have a significant impact on your website's performance in search engine rankings and user experience.

By comprehending what duplicate content is, why it matters, and how to avoid it, you empower yourself to create an online presence that not only adheres to search engine guidelines but also provides valuable and unique content for your audience.